The Multimodal User Supervised Interface and Intelligent Control (MUSIIC) project of the Rehabilitation Robotics laboratory at the Applied Science and Engineering Laboratories has been developing an intelligent assistive robot for people with physical impairments. MUSIIC users combine gesture (pointing with a laser) and spoken commands to manipulate objects in an unstructured 3-D workspace. With such a system, the user can give commands like "Put that [points to object] there [points to destination]" to move objects in the environment.
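One way to picture how a spoken command and laser pointing might be combined is the minimal sketch below. It is an illustration only, not the MUSIIC implementation: the names `DEICTIC_WORDS`, `Command`, and `fuse`, and the idea of pairing words with pointed locations strictly in spoken order, are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point3D = Tuple[float, float, float]

# Assumption: words that must be resolved by a pointing gesture.
DEICTIC_WORDS = {"that", "there"}


@dataclass
class Command:
    verb: str
    targets: List[Point3D]


def fuse(words: List[str], pointed: List[Point3D]) -> Command:
    """Pair each deictic word, in spoken order, with the next pointed location."""
    remaining = iter(pointed)
    targets: List[Point3D] = []
    for word in words[1:]:
        if word.lower() in DEICTIC_WORDS:
            try:
                targets.append(next(remaining))
            except StopIteration:
                raise ValueError(f"no pointing gesture for '{word}'") from None
    return Command(verb=words[0].lower(), targets=targets)


# "Put that [object] there [destination]" with two hypothetical laser-spot positions.
command = fuse(["Put", "that", "there"], [(0.4, 0.1, 0.0), (0.9, 0.3, 0.0)])
print(command)
```

In this sketch a command with more deictic words than pointing gestures is rejected, which is one simple way to hand an incomplete command back to the user.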

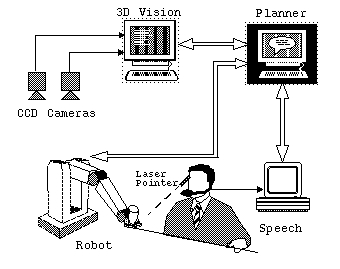

The main components of MUSIIC are: (see diagram below)

Stereo vision is used to locate objects and places that are indicated by the spot of laser light. An intelligent planning system interprets the multimodal commands and directs the robot's actions. This intelligent and adaptive planner reduces the cognitive load on the user by taking over most high-level and all low-level planning tasks. The planner is also able to learn new tasks and adapt to changing situations and events. It either replans on its own or, when it cannot construct a plan by itself, interacts with the user. Because the user remains in the control loop, interacting actively with the planner through the multimodal interface, the robot system is simpler and is relieved of the complex object recognition tasks that autonomous systems often require.

The planner uses a pair of knowledge bases. One is a user-extendible knowledge base of plans, both primitive and complex. The other is a knowledge base of objects, which stores information about objects in an abstraction hierarchy. Together, the knowledge bases allow the planner to make plans for manipulating objects about which it knows nothing a priori beyond what the vision system provides, and to make more accurate plans when more information is available from the knowledge bases.
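The two knowledge bases can be pictured with the minimal sketch below. It is an illustration under stated assumptions, not the MUSIIC data model: the object names, the properties (`grasp`, `keep_upright`), the plan names, and the functions `lookup` and `expand` are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical object knowledge base: each entry names its parent in the
# abstraction hierarchy and only the properties it overrides.
OBJECTS: Dict[str, dict] = {
    "object": {"parent": None, "grasp": "top", "keep_upright": False},
    "container": {"parent": "object", "keep_upright": True},
    "cup": {"parent": "container", "grasp": "side"},
}

# Hypothetical plan knowledge base: complex plans are sequences of other plans,
# and primitive plans are executed directly.
PRIMITIVES = {"move_to", "grasp", "release"}
PLANS: Dict[str, List[str]] = {
    "pick_up": ["move_to", "grasp"],
    "put": ["pick_up", "move_to", "release"],
}


def lookup(name: Optional[str], prop: str):
    """Walk up the hierarchy until an ancestor defines prop.

    An unknown object falls back to the generic root entry.
    """
    node = name if name in OBJECTS else "object"
    while node is not None:
        entry = OBJECTS[node]
        if prop in entry:
            return entry[prop]
        node = entry["parent"]
    raise KeyError(prop)


def expand(task: str) -> List[str]:
    """Recursively expand a plan into a flat list of primitive steps."""
    if task in PRIMITIVES:
        return [task]
    steps: List[str] = []
    for sub in PLANS[task]:
        steps.extend(expand(sub))
    return steps


# A user-taught plan is added simply by extending the plan library.
PLANS["bring"] = ["pick_up", "move_to"]

print(lookup("cup", "grasp"), lookup(None, "grasp"))
print(expand("put"))
```

In this sketch an object the system has never seen still gets a usable plan from the generic defaults, while a known object such as the cup inherits more specific handling from its ancestors.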

The MUSIIC system is illustrated with the following simple script:

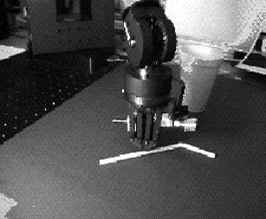

The robot arm picks up the cup and brings the cup to a position accessible by the user (above).

--Zunaid Kazi, Applied Science & Engineering Laboratories

For more information contact: Zunaid Kazi (kazi@asel.udel.edu, http://www.asel.udel.edu/~kazi/), Applied Science and Engineering Laboratories, PO Box 269, Wilmington, DE 19899. URL: http://www.asel.udel.edu/robotics/musiic/

URL of this document: http://www.asel.udel.edu/robotics/newsletter/showcase11.html

Last updated: February 5, 1997

Copyright © Applied Science and Engineering Laboratories, 1997.